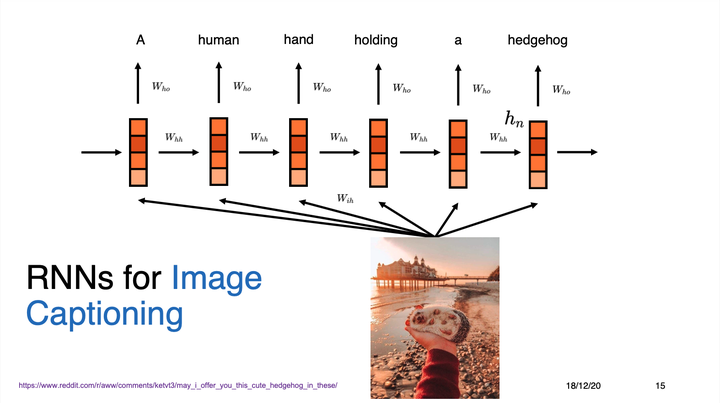

In this talk, I present a chronological overview of modeling solutions for time series and natural language. I go through seminal papers on applications of recurrent models to tasks like language modeling, neural machine translation, image captioning, and multi-modal text generation. Next, I describe the advantages and shortcomings of RNNs, explaining how the latter led to the advent of the Attention mechanism. I conclude with a brief introduction to the Transformer architecture.

The talk is part of Research Bites, the series of research-oriented seminars we organized for students of the course Data Science Lab: process and methods. The other talks in the series were given by Andrea Pasini (on Image Segmentation), Francesco Ventura (on Explainable AI), Eliana Pastor (on ML pipelines in production), Flavio Giobergia (on word embeddings), and Moreno La Quatra (on GANs).